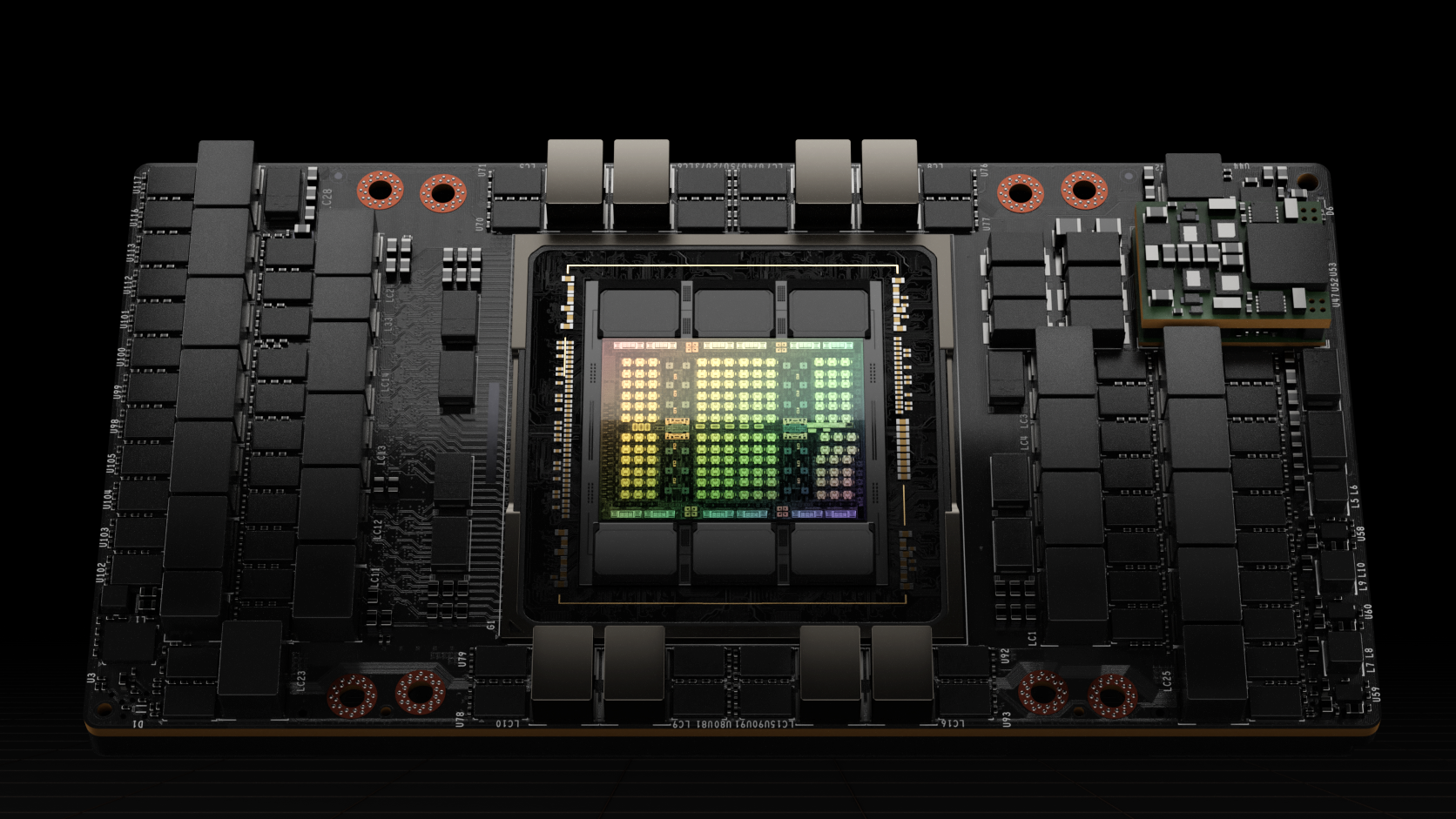

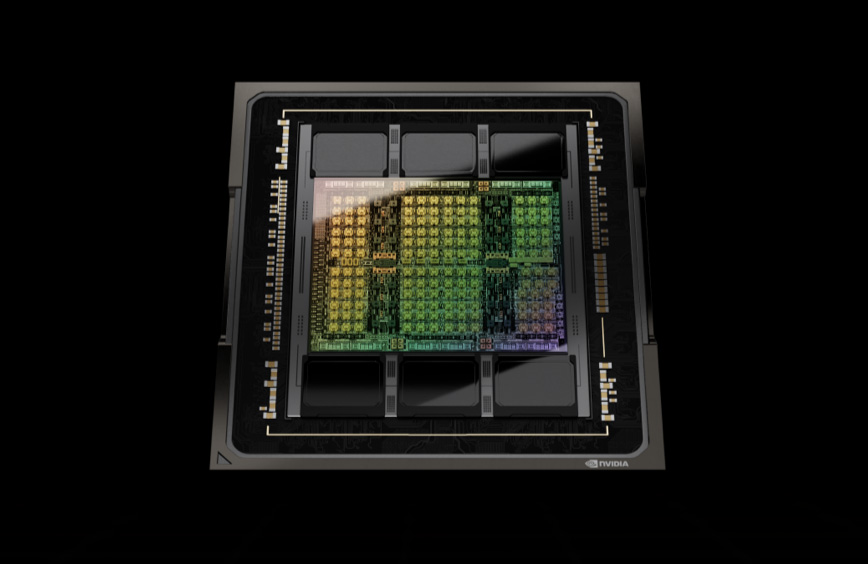

FlashAttention-3 is a recent technique for speeding up attention computation in large language models (LLMs) on Nvidia Hopper GPUs, specifically the H100 and H800. Attention, a core component of the transformer architecture behind LLMs, lets the model capture relationships between the tokens of an input sequence. However, its cost grows quadratically with sequence length, so as LLMs are scaled to handle longer sequences, attention becomes a major computational bottleneck.
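To make the quadratic growth concrete, here is a minimal sketch of standard single-head attention, softmax(QKᵀ/√d)V, in plain Python. It is an illustration only, assuming lists of floats rather than GPU tensors and no batching; the names `softmax` and `naive_attention` are hypothetical. The point to notice is the `scores` matrix: it has one entry per (query, key) pair, so both its memory and the work to fill it grow with N².

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    # Subtract the row max before exponentiating for numerical stability.
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def naive_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Standard attention softmax(Q K^T / sqrt(d)) V for a single head.

    q and k are N x d, v is N x d_v. The full N x N score matrix is built
    in memory, so memory and work both grow with N**2.
    """
    d = len(q[0])
    scale = 1.0 / math.sqrt(d)
    # N x N scores: every query token is compared with every key token.
    scores = [[scale * sum(qi * ki for qi, ki in zip(q_row, k_row)) for k_row in k]
              for q_row in q]
    # Normalize each row into attention weights that sum to 1.
    weights = [softmax(row) for row in scores]
    # Each output row is a weighted average of the value rows.
    return [[sum(w * v_row[j] for w, v_row in zip(w_row, v)) for j in range(len(v[0]))]
            for w_row in weights]
```

For example, if every key is identical, every query attends uniformly, and each output row is simply the mean of the value rows.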

Its predecessors, FlashAttention and FlashAttention-2, made attention considerably faster on Nvidia GPUs, but they did not take full advantage of the newer hardware capabilities of the H100 (FlashAttention-2 reaches only about 35% of the H100's peak throughput). FlashAttention-3 builds on them by exploiting features unique to Hopper GPUs to increase matrix-multiplication throughput, speed up data movement between the GPU's global memory and its fast on-chip memory, and run efficiently in low precision (FP8).
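The FlashAttention family avoids materializing the full N × N score matrix by processing keys and values in tiles and maintaining a running ("online") softmax, so each tile can be worked on in fast on-chip memory. The sketch below shows that tiling and online-softmax idea in plain Python for a single head. It is an illustration of the general technique, not FlashAttention-3's CUDA kernels, which on Hopper additionally use the Tensor Memory Accelerator (TMA) for asynchronous tile copies and warpgroup matrix-multiply (WGMMA) instructions. `tiled_attention` and its `block` tile-size parameter are hypothetical names.

```python
import math
from typing import List

Matrix = List[List[float]]


def tiled_attention(q: Matrix, k: Matrix, v: Matrix, block: int = 2) -> Matrix:
    """Single-head attention computed over K/V tiles with an online softmax.

    Only one tile of K and V is processed at a time, and the N x N score
    matrix is never stored. `block` is an illustrative tile size.
    """
    d = len(q[0])
    dv = len(v[0])
    scale = 1.0 / math.sqrt(d)
    out = []
    for q_row in q:
        m = -math.inf      # running max of the scores seen so far
        denom = 0.0        # running sum of exp(score - m)
        acc = [0.0] * dv   # running unnormalized output
        for start in range(0, len(k), block):
            k_tile = k[start:start + block]
            v_tile = v[start:start + block]
            # First matmul on the tile: scores of this query against the tile's keys.
            s = [scale * sum(a * b for a, b in zip(q_row, k_row)) for k_row in k_tile]
            m_new = max(m, max(s))
            # Rescale the old statistics to the new max (exp(-inf) is 0.0 on the first tile).
            correction = math.exp(m - m_new)
            p = [math.exp(x - m_new) for x in s]
            denom = denom * correction + sum(p)
            # Second matmul on the tile: accumulate P @ V_tile.
            acc = [a * correction + sum(pi * v_row[j] for pi, v_row in zip(p, v_tile))
                   for j, a in enumerate(acc)]
            m = m_new
        # Normalize once, after all tiles have been seen.
        out.append([a / denom for a in acc])
    return out
```

Because the rescaling by `correction` keeps every partial sum consistent with the current maximum, the result matches ordinary softmax attention exactly (up to floating-point rounding) for any tile size.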

The key innovation of FlashAttention-3 is its scheduling: it overlaps computation with data movement so that the GPU spends less time idle. It also interleaves matrix multiplications with softmax operations, so that the slower softmax work (such as computing exponentials) does not stall the tensor cores. Finally, it adds techniques for low-precision (FP8) computation, block quantization and incoherent processing, that reduce the accuracy loss usually caused by quantization.
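Why overlapping helps can be seen with a toy timing model. Assume each K/V block needs a matmul step (on the tensor cores) followed by a softmax step (on slower units), and that the two kinds of hardware can run concurrently. Run back to back, one unit always waits for the other; overlapped, the matmul for the next block is issued while the current block's softmax runs. FlashAttention-3 achieves overlap on the GPU with warp specialization and ping-pong scheduling between warpgroups; this model does not reproduce that mechanism. `schedule`, its abstract time units, and the two-resource model are assumptions made for illustration.

```python
from typing import List, Tuple


def schedule(n_blocks: int, gemm_time: float, softmax_time: float,
             overlap: bool) -> Tuple[float, List[Tuple[float, float, float]]]:
    """Toy timing model of one attention tile loop.

    Each block needs a matmul, then a softmax that depends on it. With
    overlap=False every step waits for the previous one. With overlap=True
    the matmul unit moves on to the next block as soon as it is free.
    Returns the total time and, per block, (gemm_end, softmax_start, softmax_end).
    """
    gemm_free = 0.0     # time the matmul unit becomes free
    softmax_free = 0.0  # time the softmax unit becomes free
    last_end = 0.0      # end of the most recent step
    trace = []
    for _ in range(n_blocks):
        gemm_start = gemm_free if overlap else last_end
        gemm_end = gemm_start + gemm_time
        # A block's softmax needs that block's scores and a free softmax unit.
        sm_start = max(gemm_end, softmax_free)
        sm_end = sm_start + softmax_time
        gemm_free, softmax_free, last_end = gemm_end, sm_end, sm_end
        trace.append((gemm_end, sm_start, sm_end))
    return last_end, trace
```

With four blocks, a matmul cost of 3 and a softmax cost of 1, the serial loop takes 16 time units while the overlapped one takes 13: in steady state each block costs max(matmul, softmax) instead of their sum. The model ignores buffer limits, so with a slow softmax it lets matmuls run arbitrarily far ahead, which real kernels cannot do.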

Optimizing Attention Computation with FlashAttention-3 on Nvidia Hopper GPUs

Performance tests indicate that FlashAttention-3 reaches up to 75% of the H100's theoretical peak throughput, making it 1.5–2x faster than FlashAttention-2 for both training and running LLMs. Faster attention shortens LLM training time, which makes it easier to experiment with larger models and datasets.
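Utilization figures like this are usually computed by counting the attention matmul FLOPs and dividing the achieved rate by the GPU's peak. The sketch below assumes the common convention of 4·N²·d FLOPs per head for the forward pass (two matmuls, Q·Kᵀ and P·V, of about 2·N²·d each, halved for causal masking). The peak value is an assumption you supply; Nvidia's published dense FP16/BF16 tensor-core figure for the H100 SXM5 is roughly 989 TFLOPS, but check the datasheet for your part. The function names are hypothetical.

```python
def attention_forward_flops(batch: int, heads: int, seq_len: int, head_dim: int,
                            causal: bool = False) -> float:
    """Matmul FLOPs of the attention forward pass.

    Two matmuls per head (Q @ K^T and P @ V), each about 2 * N * N * d FLOPs.
    A causal mask skips roughly half of the score matrix.
    """
    flops = 4.0 * batch * heads * seq_len * seq_len * head_dim
    return flops / 2 if causal else flops


def utilization(flops: float, seconds: float, peak_tflops: float) -> float:
    """Fraction of the GPU's peak matmul throughput actually achieved."""
    achieved_tflops = flops / seconds / 1e12
    return achieved_tflops / peak_tflops
```

For instance, with batch 2, 16 heads, sequence length 8192 and head dimension 128, the forward pass is 2⁴⁰ FLOPs; a hypothetical runtime of 1.5 ms against a 989 TFLOPS peak works out to about 74% utilization.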

The benefits of FlashAttention-3 extend beyond speed: it lets LLMs process longer input sequences efficiently, opening up applications such as long-document understanding and many-shot learning. By making better use of each GPU, it can also lower the cost of deploying LLMs in production, potentially reducing the number of accelerators needed.
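A quick calculation shows why avoiding the full score matrix matters for long inputs. The sketch assumes 16-bit scores for the materialized matrix and a hypothetical 128 × 128 FP32 tile for the tiled approach; the tile size and the helper names are illustrative, not FlashAttention-3's actual configuration.

```python
def score_matrix_bytes(seq_len: int, bytes_per_elem: int = 2) -> int:
    """Bytes needed to materialize one head's N x N attention score matrix."""
    return seq_len * seq_len * bytes_per_elem


def tile_bytes(block_q: int, block_k: int, bytes_per_elem: int = 4) -> int:
    """Bytes for one block_q x block_k score tile held on chip (FP32 assumed)."""
    return block_q * block_k * bytes_per_elem
```

At 131,072 tokens (128K), one head's 16-bit score matrix alone would need 2³⁵ bytes (32 GiB), and doubling the sequence length quadruples it. A 128 × 128 FP32 tile takes 64 KiB no matter how long the sequence is; a tiled kernel still needs per-row softmax statistics, but those grow only linearly with N.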

FlashAttention-3 is open source under a permissive license, and integration into popular deep-learning frameworks such as PyTorch and Hugging Face Transformers is planned. That integration would make the performance gains broadly accessible and could encourage further work on model capabilities and on optimization techniques for other hardware architectures.

FlashAttention-3 represents a significant advancement in attention computation optimization for LLMs, promising substantial performance gains, cost efficiencies, and expanded model capabilities in real-world applications.