An international team of researchers has developed Live2Diff, an AI system that transforms live video streams into stylized content in near real-time. Capable of processing video at 16 frames per second on high-end consumer hardware, Live2Diff has potential applications ranging from entertainment to augmented reality.

The system is built on uni-directional attention modeling, an approach developed by scientists from Shanghai AI Lab, Max Planck Institute for Informatics, and Nanyang Technological University.

Traditionally, video AI has relied on bi-directional temporal attention, in which each frame is processed with reference to both earlier and later frames. That requires access to future frames, which rules out real-time processing of a live stream. Live2Diff circumvents this limitation by maintaining temporal consistency using only past frames and initial warmup data, enabling smooth, immediate transformations of live video content.
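The difference between the two attention schemes can be illustrated with a small attention mask. The following is a minimal NumPy sketch, not the Live2Diff implementation: the function names, the `warmup` parameter, and the choice to let warmup frames see one another are assumptions made for illustration only.

```python
import numpy as np

def bidirectional_mask(num_frames: int) -> np.ndarray:
    """Every frame may attend to every other frame, including future ones."""
    return np.ones((num_frames, num_frames), dtype=bool)

def unidirectional_mask(num_frames: int, warmup: int = 0) -> np.ndarray:
    """mask[i, j] is True when frame i may attend to frame j.

    Frames attend only to themselves and earlier frames. As an
    illustrative assumption, the first `warmup` frames are treated as
    available up front and may attend to each other freely.
    """
    mask = np.tril(np.ones((num_frames, num_frames), dtype=bool))
    mask[:warmup, :warmup] = True
    return mask

def masked_temporal_attention(q, k, v, mask):
    """Scaled dot-product attention over the frame axis with a boolean mask."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = np.where(mask, scores, -np.inf)       # hide disallowed frames
    scores -= scores.max(axis=-1, keepdims=True)   # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v

if __name__ == "__main__":
    print(unidirectional_mask(4, warmup=2).astype(int))
```

With a uni-directional mask, the output for a given frame does not depend on any later frame, so it can be computed as soon as that frame arrives. With the bi-directional mask, changing a later frame changes the output for earlier ones, which is why that scheme cannot run on a live stream.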

Live2Diff – AI Transforms Live Video into Real-Time Stylized Content

The work is detailed in a paper published on arXiv, which highlights its potential for fields such as entertainment and social media.

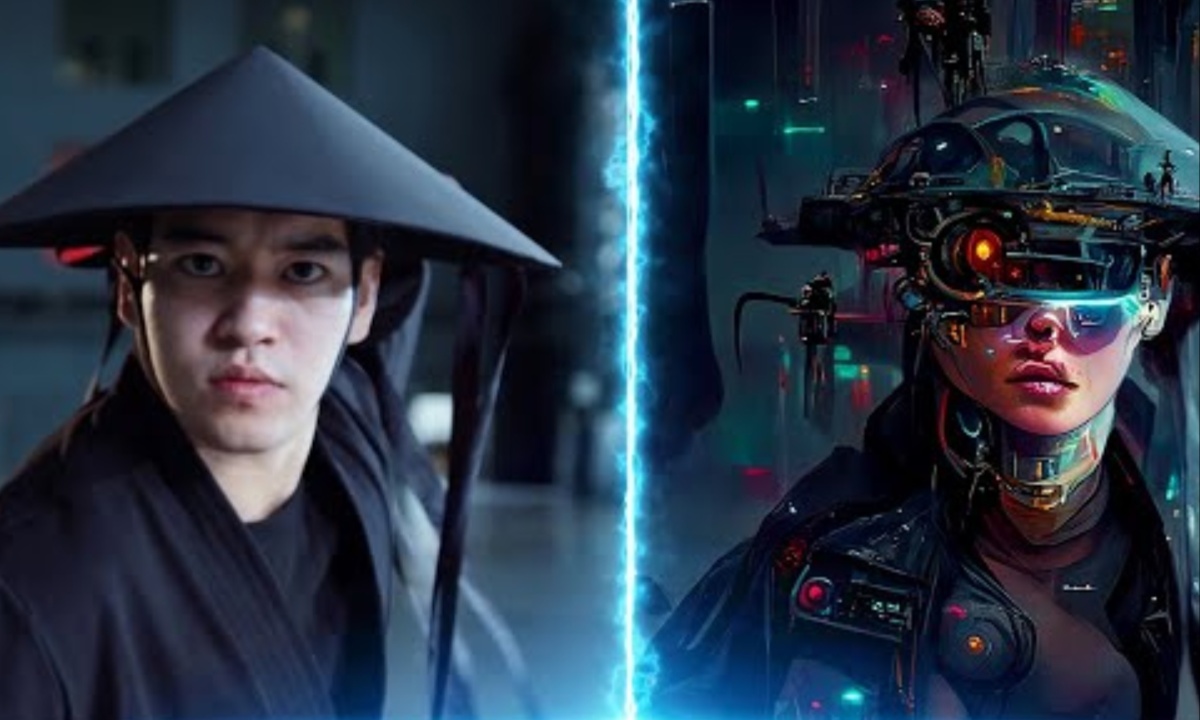

The researchers demonstrated the system's practical applications by transforming live webcam footage in real time, turning human faces into anime-style characters and other stylized variations. In their testing, Live2Diff outperformed existing methods in temporal smoothness and efficiency, which supports its viability for adoption in creative industries.

Beyond entertainment, Live2Diff holds promise for augmented and virtual reality. By enabling near-instantaneous style transfer on live video feeds, it could make interactions in virtual environments more immersive. This opens the door to applications in gaming, virtual tourism, and professional fields such as architecture and design, where real-time visualization of stylized environments could inform decision-making.

In summary, Live2Diff represents a groundbreaking achievement in AI-powered live video translation, offering new avenues for creativity and enhancing user experiences across various domains. Its uni-directional attention modeling approach not only overcomes technical barriers but also expands the possibilities for dynamic, real-time content generation in the digital age.