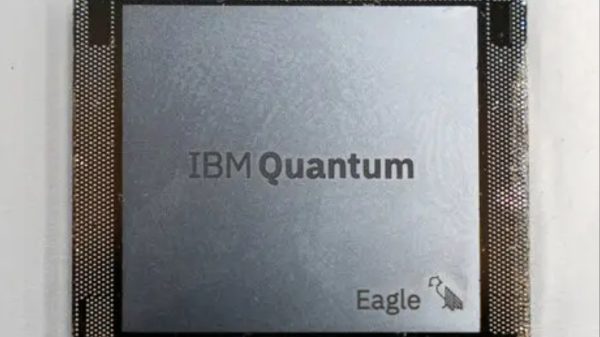

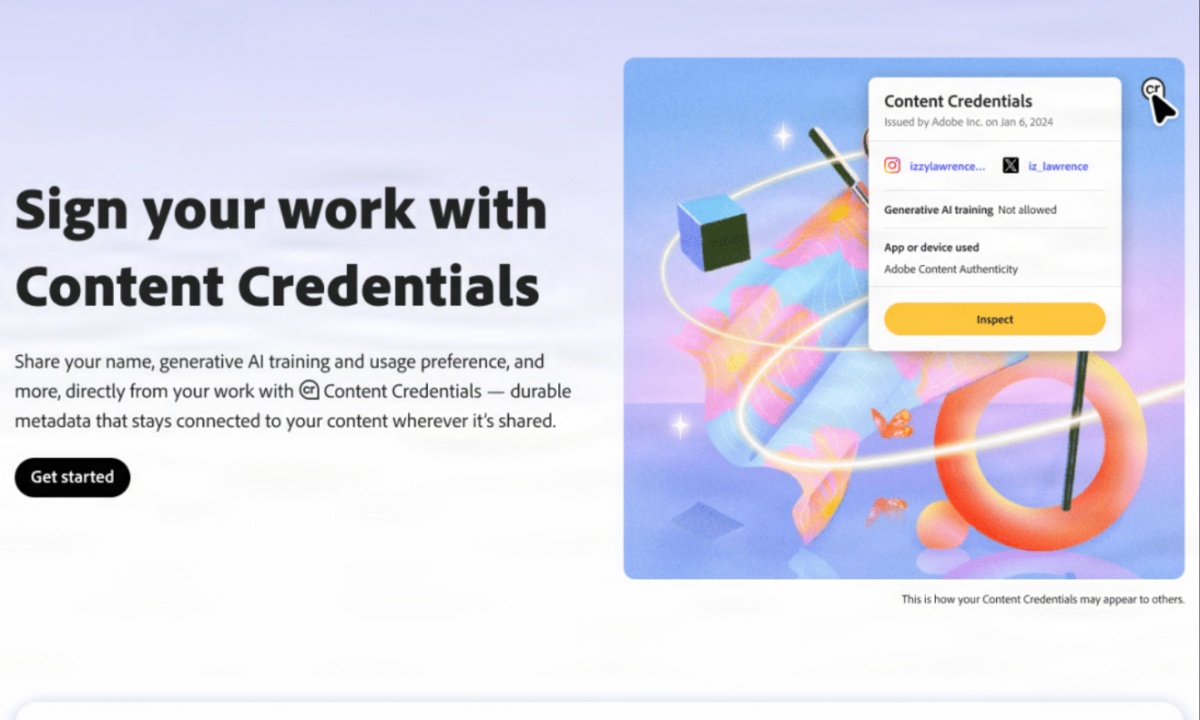

Adobe has launched a new tool, Adobe Content Authenticity, to help artists keep their work from being used to train generative AI models. The web app lets creators explicitly signal that they do not consent to their content being scraped from the internet for AI purposes.

Artists can also embed "content credentials," such as verified identities, social media handles, or other personal information, which effectively gives their work a digital signature. The aim is to give artists more control over, and more transparency about, how their creations circulate online.

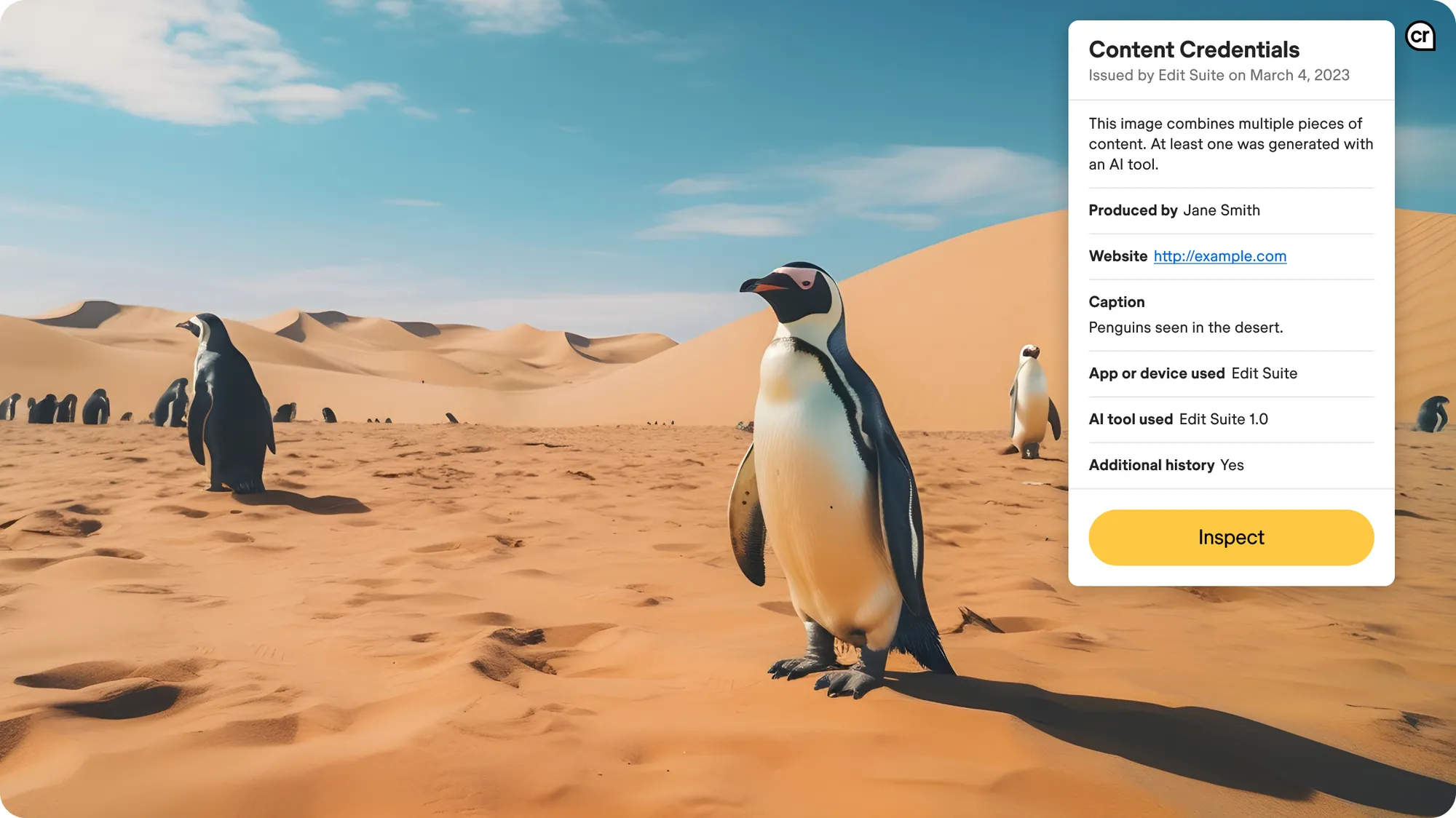

The tool is built on C2PA, a cryptographic standard for securely attaching metadata to digital content so that a work's origin can be traced. Adobe has already integrated content credentials into products such as Photoshop and its Firefly generative AI models, but the new Adobe Content Authenticity app lets creators apply them regardless of which tools they used to make their work.

A public beta of the app is expected in early 2025, and it marks an important step toward wider adoption of this technology across the internet.


Experts, including Claire Leibowicz of the Partnership on AI, see Adobe's move as part of a broader effort to empower creators as AI advances. She considers the initiative a significant step toward giving artists more control and toward opening a cultural dialogue about AI and copyright.

However, she remains skeptical that companies training AI models will respect the "Do not train" signal, noting that the effectiveness of such tools depends on broader industry adoption and enforcement.

Adobe Content Authenticity joins a growing set of technologies aimed at protecting artists from unauthorized use of their work by AI companies. For example, Glaze and Nightshade, developed by researchers at the University of Chicago, let artists alter their images in ways that are barely visible to people: Glaze makes it harder for AI models to mimic an artist's style, while Nightshade "poisons" images so that models trained on them learn distorted associations.

Adobe also offers a Chrome extension that detects content credentials already attached to content on websites, reinforcing its effort to help creators safeguard their intellectual property online. This growing array of tools reflects an ongoing battle between artists and AI companies over the use of creative work.

While Adobe's tool adds a layer of protection, it is not foolproof. The company acknowledges that no watermark or cryptographic system is completely secure: although the credentials can follow the content across the web, determined parties may still strip them.

Ely Greenfield, Adobe's chief technology officer for digital media, cautioned that these tools are designed mainly to guard against accidental stripping of metadata rather than deliberate, malicious removal. The tool also arrives at a time of strained relations between Adobe and the artistic community. Earlier backlash over changes to Adobe's terms of service led many artists to believe the company might be using their work to train generative AI without their consent.

Adobe has clarified that it does not use user content to train its AI models, but concerns over consent and ownership continue to simmer within the art world.